FrontRunners Methodology (Version 4: May 2020 - Present)

The Basics

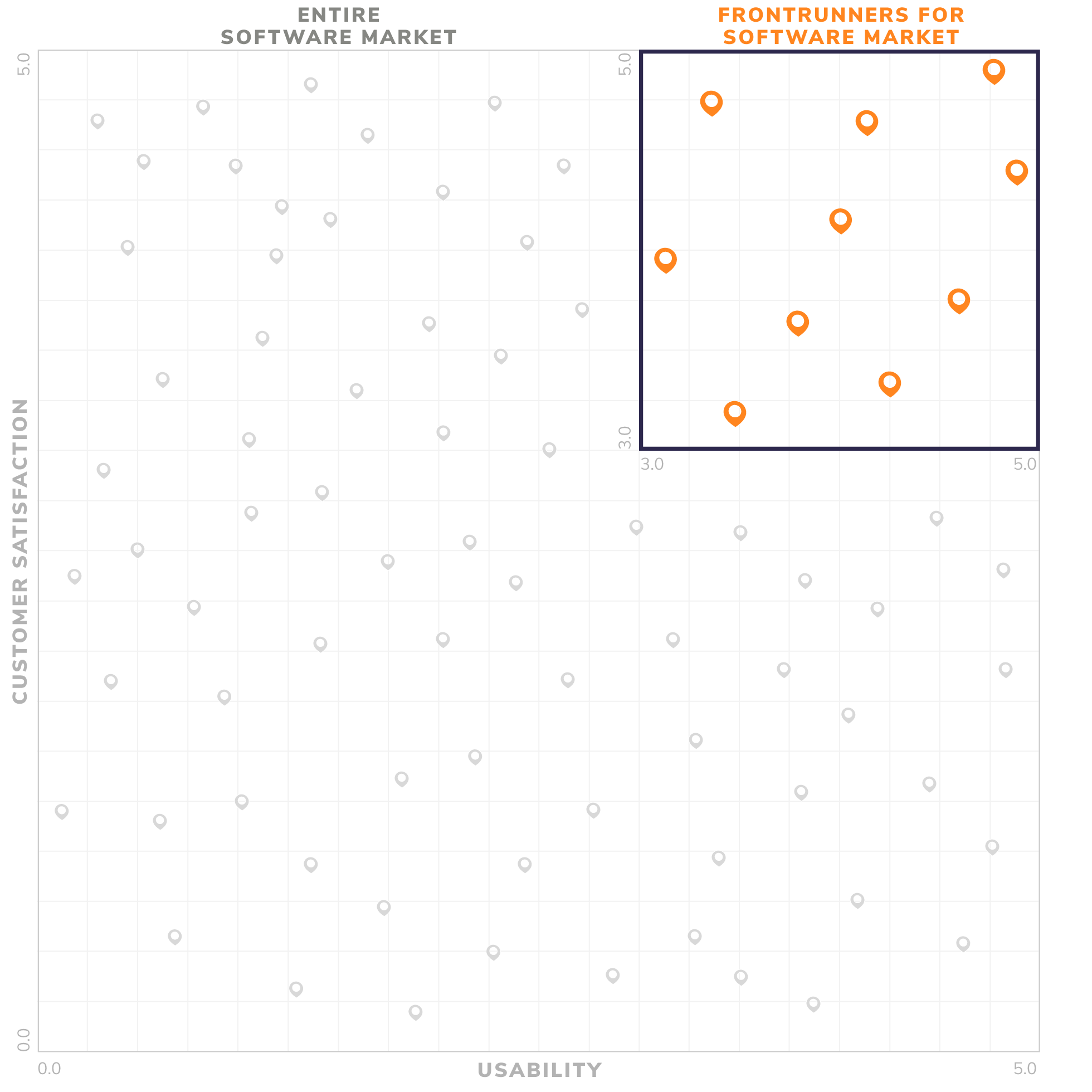

The FrontRunners methodology uses recent, published user reviews to score products on two primary dimensions: Usability on the x-axis and Customer Satisfaction on the y-axis.

Data sources include approved user reviews, public data sources and data from technology vendors. Please refer to the Software Advice Community Guidelines for more information.

Our FrontRunners reports use a snapshot of review data from a defined time frame and are not updated after publication. FrontRunners reports should therefore be used along with the detailed and current information available on each product’s profile page for the most up-to-date view of the data.

Inclusion Criteria

To be considered for inclusion in a category, products must meet all of the following criteria:

Product has at least 20 unique product reviews published on Software Advice within 24 months of the start of the research process for a given report. We have determined that two years of reviews provides a sample that is both large enough and recent enough to be valuable to buyers. The threshold is also set low enough to ensure that emerging vendors can be represented.

For FrontRunners Industry View reports, the product has at least 5 product reviews from users in the relevant industry. These reviews must have been published on Software Advice within 24 months of the start of the research process for a given report.

Product shows evidence of offering the required functionality, as demonstrated by publicly available sources such as the vendor’s website.

Product serves North American users, as demonstrated by product reviews submitted from that region.

Product is relevant to software buyers across industries or sectors; in other words, it is not a “niche” solution that caters exclusively to one specific type of user, as determined by our analysis of user reviews and/or market research. This criterion does not apply to Industry View FrontRunners reports.

Product achieves a minimum normalized overall user-review rating, after application of the Software Advice FrontRunners method for normalizing and weighting review recency (see description under “Scoring” section).
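
The review-count criteria above can be sketched as a filter over a product's reviews. This is a minimal illustration, not Software Advice's implementation: the `Review` fields, the `passes_review_criteria` helper, the half-open 24-month window ending at the research start, and the use of "at least one review from North America" as the regional test are all assumptions, and the reviews are assumed to be already deduplicated. The functionality, niche and minimum-rating criteria depend on analyst research and on the scoring described below, so they are not modeled.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Review:
    published: date  # publication date on Software Advice
    industry: str    # reviewer's industry (hypothetical field)
    region: str      # reviewer's region (hypothetical field)


def months_before(d: date, months: int) -> date:
    # Step back whole calendar months; clamping the day to 28 keeps the
    # result a valid date (a simplification, not a documented rule).
    total = d.year * 12 + (d.month - 1) - months
    return date(total // 12, total % 12 + 1, min(d.day, 28))


def passes_review_criteria(reviews: list, research_start: date,
                           industry: Optional[str] = None) -> bool:
    """Check the review-count and region criteria (hypothetical helper)."""
    window_start = months_before(research_start, 24)
    recent = [r for r in reviews if window_start <= r.published < research_start]
    if len(recent) < 20:  # at least 20 unique reviews in the 24-month window
        return False
    if industry is not None:
        # Industry View reports: at least 5 of those reviews from the industry.
        if sum(r.industry == industry for r in recent) < 5:
            return False
    # Assumed proxy for "serves North American users": any review from the region.
    return any(r.region == "North America" for r in recent)
```

For an Industry View report, the same call takes the industry name, so a product needs both the 20-review total and 5 reviews from that industry.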

Scoring

The Usability score (x-axis) is a weighted average of two user ratings from the last 24 months of the analysis period:

Functionality: End-user ratings on “Functionality.” This accounts for 50% of the total Usability score.

Ease-of-Use: End-user ratings on “Ease-of-use.” This accounts for 50% of the total Usability score.

The Customer Satisfaction score (y-axis) is a weighted average of three user ratings from the last 24 months of the analysis period:

Value for Money: End-user ratings on how valuable users consider the product to be relative to its price. This accounts for 25% of the total Customer Satisfaction score.

Likelihood to Recommend: End-user ratings on how likely they are to recommend the product to others. This accounts for 25% of the total Customer Satisfaction score.

Customer Support: End-user ratings on the product’s customer support. This accounts for 50% of the total Customer Satisfaction score.
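
The axis weights above can be applied as a plain weighted average. In this sketch the per-criterion averages are hypothetical and are assumed to be already on the standard 5-point scale; the recency and volume normalization described in the next paragraph is not modeled, and the helper name `weighted_score` is illustrative.

```python
# Axis weights as stated in the Scoring section.
USABILITY_WEIGHTS = {"functionality": 0.50, "ease_of_use": 0.50}
SATISFACTION_WEIGHTS = {
    "value_for_money": 0.25,
    "likelihood_to_recommend": 0.25,
    "customer_support": 0.50,
}


def weighted_score(ratings: dict, weights: dict) -> float:
    """Combine per-criterion average ratings using weights that sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(ratings[name] * weight for name, weight in weights.items())


# Hypothetical per-criterion averages for one product, on a 5-point scale.
ratings = {
    "functionality": 4.4,
    "ease_of_use": 4.0,
    "value_for_money": 4.2,
    "likelihood_to_recommend": 4.6,
    "customer_support": 4.5,
}
usability = weighted_score(ratings, USABILITY_WEIGHTS)
satisfaction = weighted_score(ratings, SATISFACTION_WEIGHTS)
```

With these example ratings, Usability works out to 0.5 × 4.4 + 0.5 × 4.0 = 4.2 and Customer Satisfaction to 0.25 × 4.2 + 0.25 × 4.6 + 0.5 × 4.5 = 4.45. Because Customer Support carries half of the y-axis weight, it moves the Customer Satisfaction score twice as much as either of the other two ratings.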

User ratings are collected using various scales (usually 1 to 5 or 0 to 10); all rating scales are translated to a standard 5-point scale before use in our calculations. Average product ratings are normalized for recency and volume of reviews. Scores are then translated from a 5-point scale to a 100-point scale.
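
The conversion formulas are not published. One sketch consistent with the text assumes a linear mapping of each native scale onto 1 to 5, and a 5-to-100 conversion by multiplying by 20; both formulas and both helper names are assumptions. Multiplying by 20 maps a perfect 5.0 to the maximum of 100 and maps 3.0 to 60, which lines up with the Version 3 floor of 3.0 and the 60-point floor below.

```python
def to_five_point(value: float, low: float, high: float) -> float:
    """Linearly map a rating from its native [low, high] scale onto 1-5 (assumed)."""
    if high <= low or not low <= value <= high:
        raise ValueError("rating outside its scale")
    return 1 + 4 * (value - low) / (high - low)


def to_hundred_point(score: float) -> float:
    """Rescale a 5-point score to a 100-point scale by multiplying by 20 (assumed)."""
    return score * 20


# A hypothetical 0-10 rating of 8 and a 4-star rating on the 1-5 scale.
ten_point_rating = to_five_point(8, 0, 10)  # 1 + 4 * 0.8 = 4.2
star_rating = to_five_point(4, 1, 5)        # already on the 1-5 scale: 4.0
```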

Products can receive a maximum score of 100 on each axis. Products that meet a minimum score threshold for each axis are included as FrontRunners. The minimum score varies by category so that no more than 25 and no fewer than 10 products are included as FrontRunners. No product with a score less than 60 in either dimension is included in any FrontRunners graphic.
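
How the per-category cutoff is chosen is not spelled out. The sketch below assumes a single cutoff applied to both axes, which is equivalent to ranking eligible products by their weaker axis score and keeping the top 25; the `Product` type and `select_frontrunners` helper are hypothetical, ties at the cutoff are ignored, and the 10-product minimum is not enforced because the text does not say how it interacts with the 60-point floor.

```python
from typing import NamedTuple


class Product(NamedTuple):
    name: str
    usability: float     # 0-100
    satisfaction: float  # 0-100


def select_frontrunners(products, floor: float = 60.0, max_included: int = 25):
    """Pick up to `max_included` products that clear the floor on both axes."""
    # No product scoring below the floor in either dimension is eligible.
    eligible = [p for p in products if min(p.usability, p.satisfaction) >= floor]
    # Rank by the weaker axis: one cutoff applied to both axes then admits
    # exactly the top of this list (ties at the cutoff are ignored here).
    eligible.sort(key=lambda p: min(p.usability, p.satisfaction), reverse=True)
    return eligible[:max_included]
```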

For products included, the Usability and Customer Satisfaction scores determine their positions on the FrontRunners graphic.

Only the top-scoring products appear in FrontRunners graphics.

FrontRunners Methodology (Version 3: June 2019 - April 2020)

The Basics

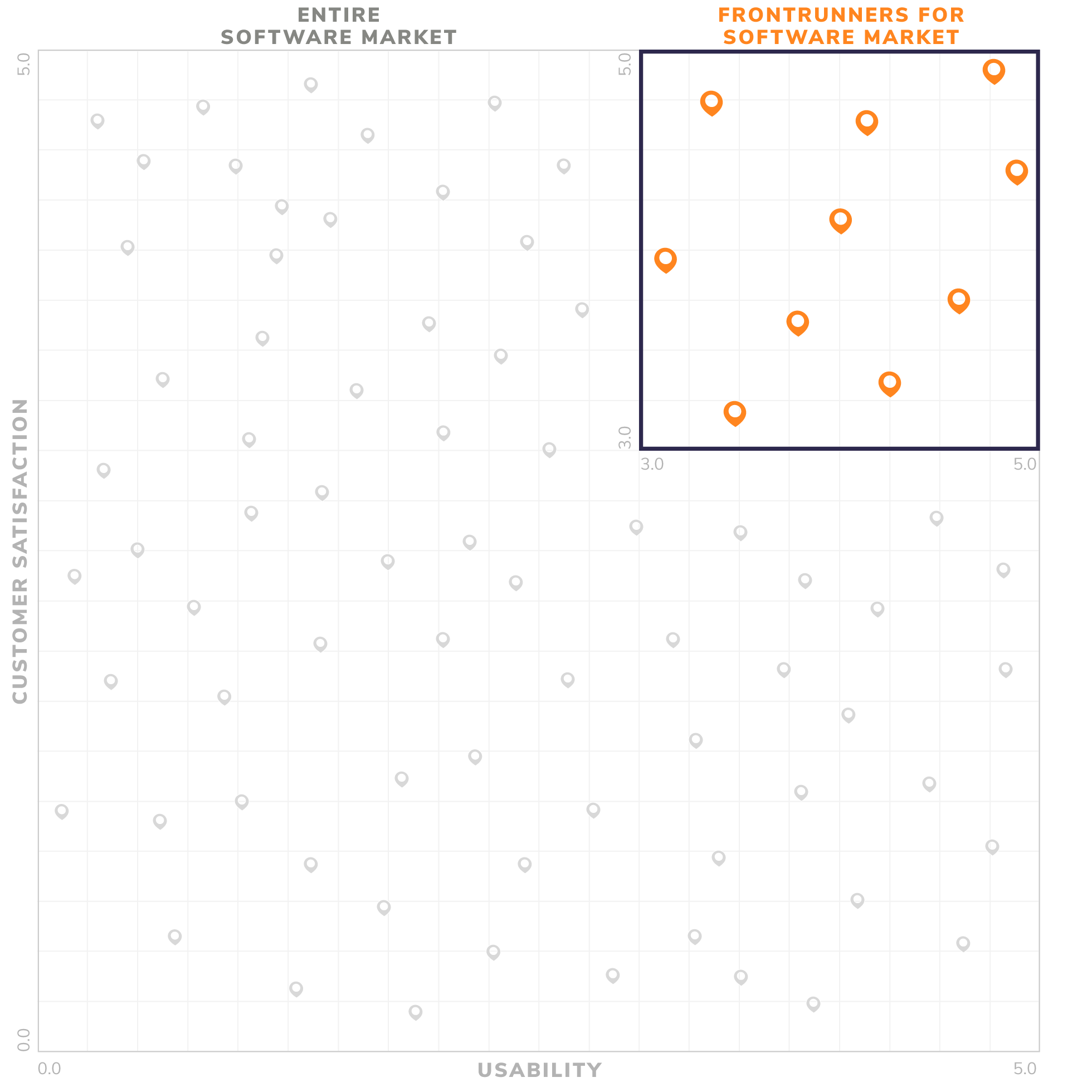

The FrontRunners methodology uses published user reviews to calculate scores for products on two primary dimensions: Usability on the x-axis and Customer Satisfaction on the y-axis.

Eligibility

To be eligible for inclusion, products must:

Be sold in the North American software market.

Offer required functionality as determined by our research analysts, who provide coverage of and have familiarity with products in each category. Each product must have all core features plus a majority of common features for that market.

Have at least 20 unique user-submitted product reviews across the three Gartner Digital Markets web properties—softwareadvice.com, capterra.com, and getapp.com—published within 24 months of the start of the analysis period for a given report’s update, of which at least 10 are published in the most recent 12 months.

Because FrontRunners is intended to cover a given software category at large, the FrontRunners team uses its market experience and knowledge, existing market research and analysis of small business software buyer needs to assess a product’s suitability for the category. The deciding question is whether the product can reasonably be expected to be relevant to most software buyers, across industries, who are searching for a system in that category.
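
The Version 3 review threshold combines a 24-month count with a 12-month recency requirement. A minimal sketch, assuming the input is the publication dates of unique reviews pooled from all three sites and that both windows end at the start of the analysis period; `meets_v3_review_threshold` is a hypothetical name, and `months_before` clamps the day to 28 as a simplification.

```python
from datetime import date


def months_before(d: date, months: int) -> date:
    # Step back whole calendar months, clamping the day to 28 (simplification).
    total = d.year * 12 + (d.month - 1) - months
    return date(total // 12, total % 12 + 1, min(d.day, 28))


def meets_v3_review_threshold(review_dates, analysis_start: date) -> bool:
    """20+ reviews in the prior 24 months, 10+ of them in the prior 12 months."""
    start_24 = months_before(analysis_start, 24)
    start_12 = months_before(analysis_start, 12)
    last_24 = [d for d in review_dates if start_24 <= d < analysis_start]
    last_12 = [d for d in last_24 if d >= start_12]
    return len(last_24) >= 20 and len(last_12) >= 10
```

A product with 20 qualifying reviews still fails if fewer than 10 of them fall in the most recent 12 months.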

Scoring

The Usability score (x-axis) is a weighted average of two user ratings from the last 24 months of the analysis period:

Functionality: End-user ratings of one to five stars on “Functionality.” This accounts for 50% of the total Usability score.

Ease-of-Use: End-user ratings of one to five stars on “Ease-of-use.” This accounts for 50% of the total Usability score.

The Customer Satisfaction score (y-axis) is a weighted average of three user ratings from the last 24 months of the analysis period:

Value for Money: End-user ratings of one to five stars on how valuable users consider the product to be relative to its price. This accounts for 25% of the total Customer Satisfaction score.

Likelihood to Recommend: End-user ratings of one to five stars on how likely they are to recommend the product to others. This accounts for 25% of the total Customer Satisfaction score.

Customer Support: End-user ratings of one to five stars on the product’s customer support. This accounts for 50% of the total Customer Satisfaction score.

Products receive a score between one and five for each axis. Products that meet a minimum score for each axis are included as FrontRunners. The minimum score varies by category so that no more than 25 and no fewer than 10 products are included as FrontRunners. No product with a score less than 3.0 in either dimension is included in any FrontRunners graphic.

For products included, the Usability and Customer Satisfaction scores determine their positions on the FrontRunners graphic.

Only the top-scoring products appear in FrontRunners graphics.

Data

Data sources include published user reviews, public data sources and data from technology vendors. The user-generated product reviews data incorporated into FrontRunners is collected from submissions to all three Gartner Digital Markets sites (see above section for details). As a quality check, all reviews are verified and moderated prior to publication. Please refer to the Software Advice Community Guidelines for more information.

External Usage Guidelines

Providers must abide by the FrontRunners External Usage Guidelines when referencing FrontRunners content. Except in digital media with character limitations, the following disclaimer MUST appear with any/all FrontRunners reference(s) and graphic use:

FrontRunners constitute the subjective opinions of individual end-user reviews, ratings, and data applied against a documented methodology; they neither represent the views of, nor constitute an endorsement by, Software Advice or its affiliates.

Escalation Guidelines

We take the integrity of our research seriously. If you have questions or concerns about FrontRunners content, the methodology, or other issues, you may contact the FrontRunners team at methodologies@gartner.com. Please provide as much detail as you can, so we can understand the issues, review your concerns and take appropriate action.

FrontRunners Methodology (Version 2: May 2018 - May 2019)

The Basics

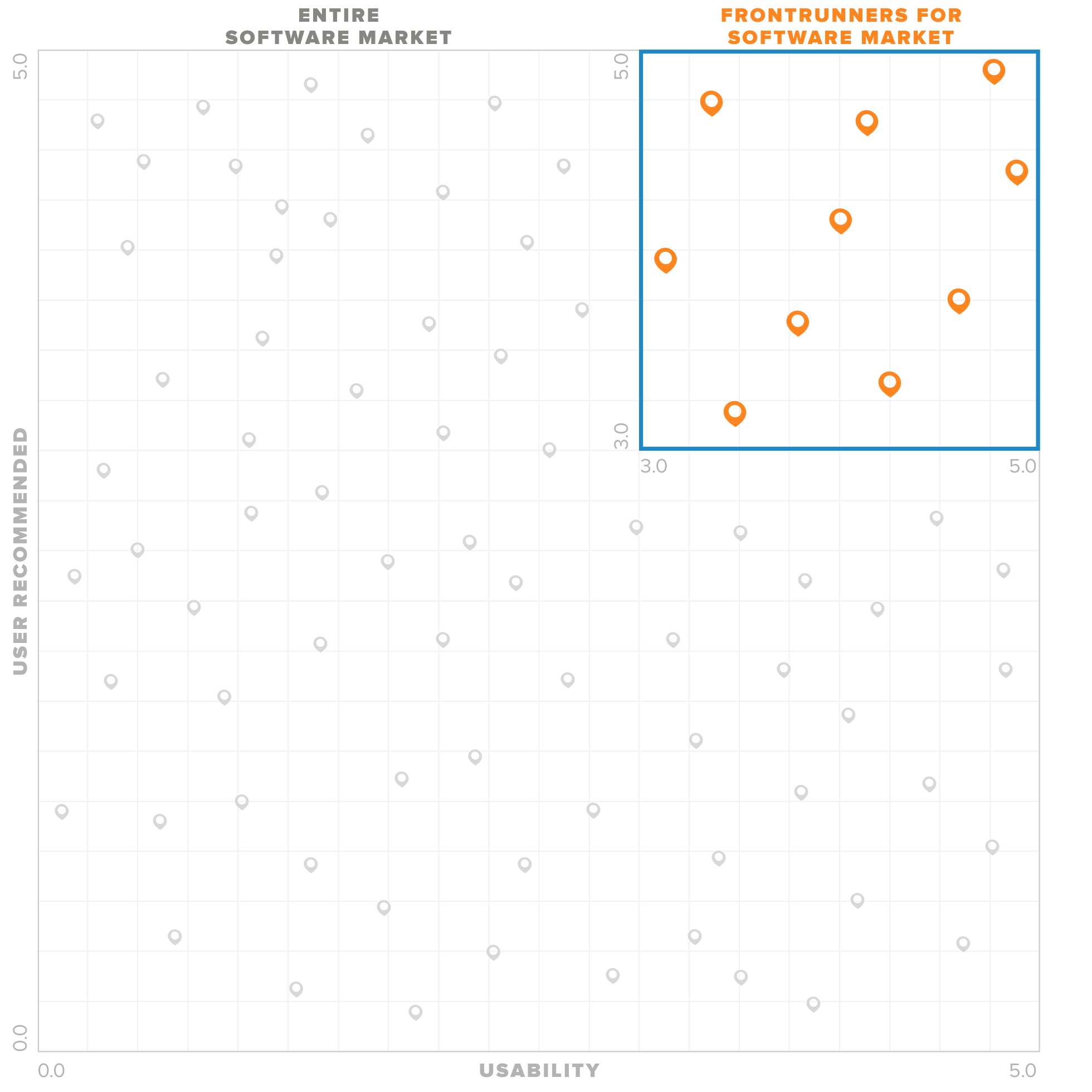

The FrontRunners methodology uses published user reviews to calculate scores for products on two primary dimensions: Usability on the x-axis and User Recommended on the y-axis.

The Usability score is a weighted average of two user ratings:

End-user ratings of one to five stars on the product’s functionality.

End-user ratings of one to five stars on the product’s ease of use.

The User Recommended score is a weighted average of two user ratings:

End-user ratings of one to five stars on how valuable users consider the product to be relative to its price.

End-user ratings of one to five stars on how likely they are to recommend the product to others.

There are two FrontRunners graphics for each market, one “Small Vendor” and one “Enterprise Vendor” graphic. The “Small Vendor” graphic highlights qualifiers from smaller (by employee size) vendors, while the “Enterprise Vendor” graphic displays qualifiers for larger (by employee size) vendors.*

Products that meet a market-specific (or product-category-specific) minimum score on each axis for their size group (Small Vendor or Enterprise Vendor) are included as FrontRunners in that group. The minimum score varies so that no more than 15 and no fewer than 10 products are included in any FrontRunners graphic.

The minimum score cutoff to be included in the FrontRunners graphic varies by category, depending on the range of scores in each category. No product with a score less than 3.0 in either dimension is included in any FrontRunners graphic. For products included, the Usability and User Recommended scores determine their positions on the FrontRunners graphic.

For each individual rating in both the Usability and User Recommended criteria, the methodology weights recent reviews more heavily.
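
The methodology does not specify how recent reviews are weighted more heavily. As one illustration only, the sketch below assumes exponential decay with a hypothetical one-year half-life, so a rating's weight halves for every 365 days of age; the function name and the `half_life_days` parameter are hypothetical.

```python
from datetime import date


def recency_weighted_average(ratings, as_of: date, half_life_days: float = 365.0) -> float:
    """Average (published_date, stars) pairs, halving weight every half_life_days (assumed)."""
    total = weight_sum = 0.0
    for published, stars in ratings:
        age_days = (as_of - published).days
        weight = 0.5 ** (age_days / half_life_days)
        total += weight * stars
        weight_sum += weight
    if weight_sum == 0:
        raise ValueError("no ratings to average")
    return total / weight_sum


ratings = [(date(2019, 1, 1), 5), (date(2018, 1, 1), 3)]
score = recency_weighted_average(ratings, as_of=date(2019, 1, 1))
```

Here the year-old 3-star rating counts half as much as the new 5-star rating, so the result is about 4.33 rather than the unweighted 4.0.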

Eligibility

Markets are defined by a core set of functionality, and to be eligible for FrontRunners, products must offer that core set of functionality. The required core functionality is determined by our research analysts, who provide coverage of and have familiarity with products in that market. Additionally, a product must have at least 20 unique user-submitted product reviews across the three Gartner Digital Markets web properties: softwareadvice.com, capterra.com and getapp.com. These reviews must be published within 18 months of the start of the analysis period.

Inclusion in an “Enterprise Vendor” or “Small Vendor” FrontRunners graphic is based on the vendor’s employee count. Vendors eligible for the “Enterprise Vendor” graphic must have more employees than the median employee count for all vendors in the market or 100 employees, whichever is greater. Vendors whose employee counts do not exceed that threshold qualify for the “Small Vendor” graphic.
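
The size rule reduces to one threshold per market: the greater of the market's median employee count and 100. A minimal sketch, assuming a mapping of vendor names to employee counts for a single market; `classify_vendors` and the example vendors are hypothetical.

```python
from statistics import median


def classify_vendors(employee_counts: dict) -> dict:
    """Assign each vendor in one market to a size group (hypothetical helper)."""
    # Enterprise threshold: the market median or 100 employees, whichever is greater.
    threshold = max(median(employee_counts.values()), 100)
    return {
        vendor: "Enterprise Vendor" if count > threshold else "Small Vendor"
        for vendor, count in employee_counts.items()
    }


market = {"A": 30, "B": 50, "C": 120, "D": 400, "E": 2000}
groups = classify_vendors(market)
```

In this example the median (120) exceeds 100, so only D and E, with more than 120 employees, go to the Enterprise Vendor graphic; C, at exactly the median, stays in the Small Vendor graphic.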

In cases where fewer than 10 products qualify for either the “Small Vendor” or the “Enterprise Vendor” FrontRunners graphic in a given market, the market will have only one graphic combining both enterprise and small vendors, color-coded to distinguish vendor size.
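
The fallback to a single graphic can be sketched as follows, assuming each size group's qualifiers have already been selected; `build_graphics` and its `min_per_graphic` parameter are hypothetical, and the size label kept on each combined entry stands in for the color coding.

```python
def build_graphics(small: list, enterprise: list, min_per_graphic: int = 10) -> dict:
    """Return the graphic(s) for one market, given each size group's qualifiers."""
    if len(small) < min_per_graphic or len(enterprise) < min_per_graphic:
        # One combined graphic; each product keeps its size group for color coding.
        combined = [(p, "Small Vendor") for p in small]
        combined += [(p, "Enterprise Vendor") for p in enterprise]
        return {"Combined": combined}
    return {"Small Vendor": small, "Enterprise Vendor": enterprise}
```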

Data

Data sources include published user reviews, public data sources and data from technology vendors. The user-generated product reviews data incorporated into FrontRunners is collected from submissions to all three Gartner Digital Markets sites (see above section for details). As a quality check, we ensure the reviewer is valid, that the review meets quality standards and that it is not a duplicate.

The employee count data comes from public sources, collected by Gartner associates. As a quality check, we compare this data against data submitted by the providers. We use this data to place a product in either the “Small Vendor” or the “Enterprise Vendor” version of the graphic. Employee size has no bearing on a product’s inclusion in the graphics beyond determining which version the product qualifies for.

External Usage Guidelines

Providers must abide by the FrontRunners External Usage Guidelines when referencing FrontRunners content. Except in digital media with character limitations, the following disclaimer MUST appear with any/all FrontRunners reference(s) and graphic use:

FrontRunners constitute the subjective opinions of individual end-user reviews, ratings, and data applied against a documented methodology; they neither represent the views of, nor constitute an endorsement by, Software Advice or its affiliates.

Terms and Conditions

Vendors should submit only publicly available information and should not submit any information that they deem to be confidential.

*In the event fewer than 10 products qualify for either a “Small Vendor” or “Enterprise Vendor” FrontRunners graphic, the vendors that do qualify will be combined into a single graphic.

FrontRunners Methodology (Version 1: September 2016 - May 2018)

The Basics

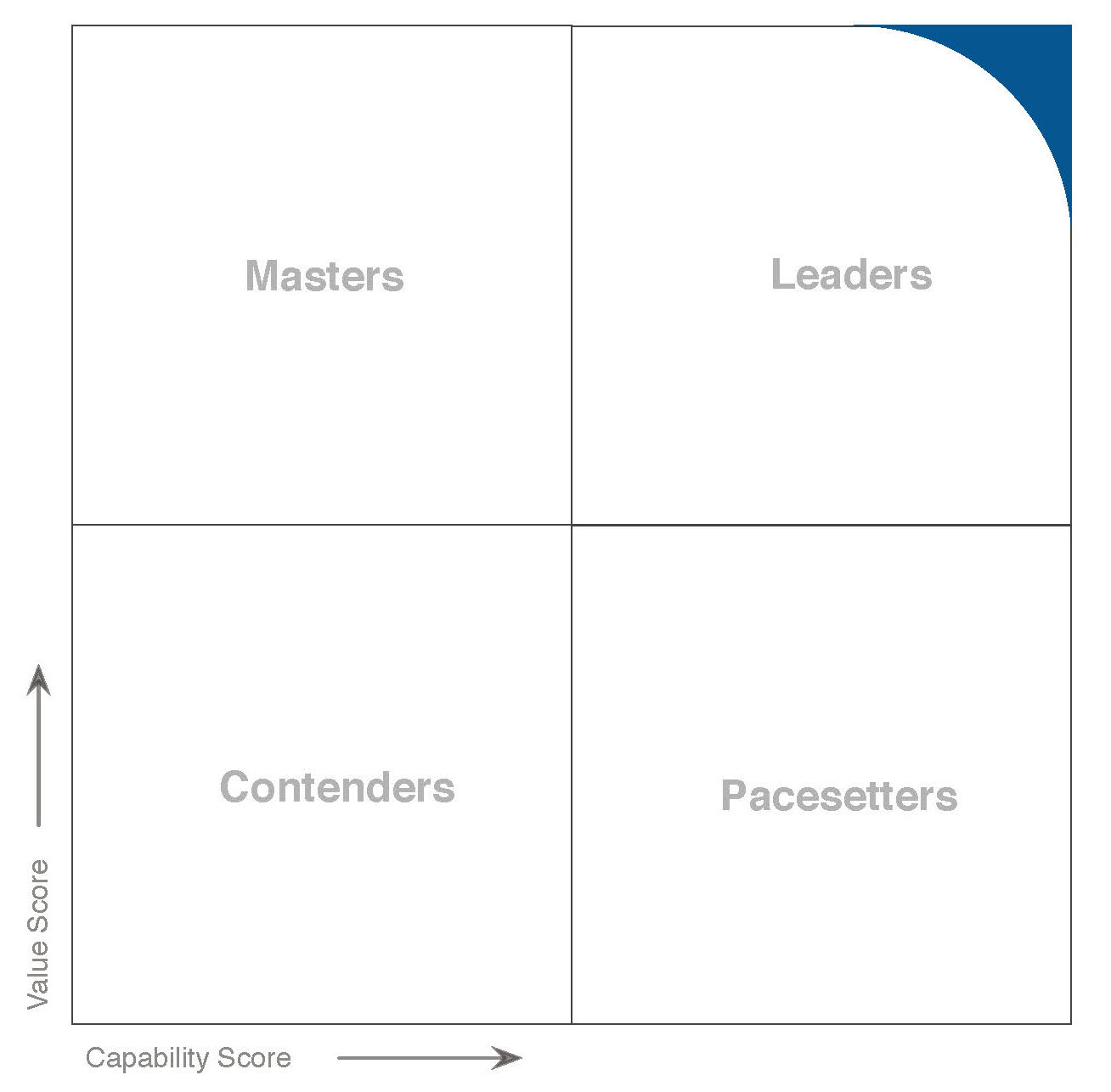

The FrontRunners methodology assesses and calculates a score for products on two primary dimensions: Capability on the x-axis and Value on the y-axis.

The Capability score is an overall weighted average of scores including:

End-user ratings of one to five stars on the product’s functionality.

End-user ratings of one to five stars on the product’s ease of use.

End-user ratings of one to five stars on the product’s customer support.

A score, relative to other products in the market, for the product's inclusion of key functionality for the software category.

A score, relative to other products in the market, representing the number of other products that integrate with it.

The Value score is an overall weighted average of scores including:

End-user ratings of one to five stars on overall satisfaction with the product.

End-user ratings of one to five stars on how valuable users consider the product to be relative to its price.

End-user ratings of one to five stars on how likely they are to recommend the product to others.

A score, relative to other products in the market, for the size of the product's customer base.

A score, relative to other products in the market, for the number of professionals in the market who have experience with the product (e.g., users, developers, administrators).

A score, relative to other products in the market, representing the total number of user reviews across the three Gartner web properties.

A score, relative to other products in the market, representing the average number of times per month internet users search for the product on Google.

Markets are defined by a core set of functionality, and products considered for, and included in, FrontRunners must offer that core set of functionality. Additional related functionality can contribute to the capability score for a product. To qualify for consideration in a FrontRunners graphic, a product must have a minimum number of unique, user-submitted product reviews across the three Gartner Digital Markets web properties: softwareadvice.com, capterra.com and getapp.com. The minimum number of reviews required per product may differ by category, but will generally be between 10 and 20 unique reviews.

More Methodology Details

The FrontRunners methodology assesses products on two primary dimensions: Capability on the x-axis and Value on the y-axis. Products receive a score between one and five for each axis. Products that meet a minimum score for each axis are included as FrontRunners. The minimum score cutoff to be included in the FrontRunners graphic varies by category, depending on the range of scores in each category. For products included, the Capability and Value scores determine their positions on the FrontRunners graphic.

The Capability score is based on three criteria: user ratings on capability, a functionality breadth analysis, and a business confidence assessment.

1. The capability user ratings criterion captures user satisfaction with the product’s capabilities. The capability ratings score is a weighted average of the one- to five-star rating scores from three user ratings:

a. Functionality

b. Ease of use

c. Customer support

2. The functionality breadth analysis is based on:

a. The product’s coverage of core software category functions

b. The number of other products it integrates with, along with the number of other products that state they integrate with it

For each of these data points, the methodology calculates the percentile ranking for each product relative to all other products in the software category that have qualified for FrontRunners consideration. That percentile ranking is then translated into a one to five score.
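
The percentile-to-score step can be sketched as below. Neither the percentile definition nor the mapping is published, so both are assumptions: a product's percentile rank is taken as the share of the other qualifying products with a strictly lower value, and that share is mapped linearly onto 1 to 5. The helper name and example data are hypothetical. The business confidence and product adoption sections below describe the same percentile-to-score step.

```python
def percentile_scores(values: dict) -> dict:
    """Map each product's raw data point to a 1-5 score via its percentile rank."""
    names = list(values)
    others = len(names) - 1
    scores = {}
    for name in names:
        # Assumed percentile rank: share of the other products with a lower value.
        below = sum(values[other] < values[name] for other in names if other != name)
        # A lone qualifier is given the top rank (an arbitrary choice).
        rank = below / others if others else 1.0
        scores[name] = 1 + 4 * rank  # assumed linear mapping: 0 -> 1, 1 -> 5
    return scores


integrations = {"A": 12, "B": 40, "C": 95}
scores = percentile_scores(integrations)
```

With three qualifying products, A, B and C receive scores of 1.0, 3.0 and 5.0. Because only ranks matter, C would score 5.0 whether it had 95 integrations or 950, which is the relative (rather than absolute) comparison the Data section describes.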

3. The business confidence assessment is an indicator of whether the software company will likely continue to invest in the product for the next 12-18 months. The analysis is based on four data points:

a. The product’s current customer base

b. The annual growth rate of the product’s customer base

c. The vendor’s current employee base

d. The annual growth rate of the vendor’s employee base

If both the company's size and the product's customer base are significant and growing, the likelihood that the company will continue to invest in the product is higher than in the alternative scenarios. For each of these four data points, the methodology calculates the percentile ranking for each product relative to all other products in the software category that have qualified for FrontRunners consideration. That percentile ranking is then translated to a one to five score.

The overall one to five Capability score is a weighted average of the scores for user ratings, functionality breadth and business confidence.

The Value score is based on two criteria: user ratings on value and product adoption.

1. The value user ratings criterion captures users' satisfaction with the business value provided by the product. The value ratings score is a weighted average of the one- to five-star rating scores from three user ratings:

a. Overall ratings of the product.

b. How likely users are to recommend the product to others.

c. How valuable users consider the product to be relative to its price.

2. The product adoption data analysis assesses whether the product is positioned in the market as more of an industry standard with higher adoption (thus earning a higher score) or as an emerging competitor with more limited adoption (thus earning a lower score). The product adoption analysis for each product is based on four data points:

a. The size of the product's customer base.

b. The number of professionals in the market who have experience with the product (e.g., users, developers, administrators).

c. The total number of user reviews across the three Gartner web properties.

d. The average number of times per month internet users search for the product on Google.

For each of these four data points, the methodology calculates the percentile ranking for each product relative to all other products in the software category that have qualified for FrontRunners consideration. That percentile ranking is then translated into a one to five score.

The overall one to five Value score is a weighted average of the scores for value user ratings and product adoption.

Data

Data sources include user reviews and ratings, public data sources and data from technology vendors. The user-generated product reviews data incorporated into FrontRunners is collected from submissions to all three Gartner Digital Markets sites (softwareadvice.com, capterra.com and getapp.com). As a quality check, we ensure the reviewer is valid, that the review meets quality standards and that it is not a duplicate.

The business confidence and product adoption data comes from public sources, collected by either a third-party data provider or by Gartner associates. As a quality check, we compare this data against data submitted by the providers. We use this data to calculate a product's percentile ranking, which allows us to determine how products compare relative to one another rather than determine an absolute number.

The functionality breadth data is collected from the technology providers. We check the data provided and challenge data that seems inflated or unlikely. We use this data to calculate a product's percentile ranking, which allows us to determine how products compare relative to one another rather than determine an absolute number.

Terms and Conditions

Qualifying vendor's corporate logo is used to identify its position in the FrontRunners graphic. Qualifying vendor grants to Software Advice a non-exclusive, worldwide license to use its corporate logo for this purpose, provided that such use (i) adheres to qualifying vendor’s standard approved format; and (ii) is limited to the Software Advice website and related FrontRunners materials.

Vendors should submit only publicly available information and should not submit any information that they deem to be confidential.

Information for Vendors About FrontRunners

External Usage Guidelines

Providers must abide by the FrontRunners External Usage Guidelines when referencing FrontRunners content. Except in digital media with character limitations, the following disclaimer MUST appear with any/all FrontRunners reference(s) and graphic use:

FrontRunners constitute the subjective opinions of individual end-user reviews, ratings, and data applied against a documented methodology; they neither represent the views of, nor constitute an endorsement by, Software Advice or its affiliates.

Escalation Guidelines

We take the integrity of our research seriously. If you have questions or concerns about FrontRunners content, the methodology, or other issues, you may contact the FrontRunners team at methodologies@gartner.com. Please provide as much detail as you can, so we can understand the issues, review your concerns and take appropriate action.

Tentative Publication Schedule

Please refer to our full publication schedule. Note: This schedule is intended to be used as a guide only and is subject to change at any time at our discretion.

Frequently Asked Questions

Do software and SaaS providers need to notify you if they’d like their product(s) to be considered?

No. We automatically consider all products in a given category that have profiles on our site and meet our eligibility criteria.

Do you need any additional information from providers to create the reports?

No. At this time, we neither need nor accept any information directly from our software and SaaS provider community. We obtain all the information we need from our internal databases and publicly available data.

Does it matter on which of the Gartner Digital Markets sites (Capterra, GetApp or Software Advice) reviews are published?

No, reviews are shared across all three sites and all are considered equally in our research.

When will you publish [x] category next?

Please refer to our research calendar for a tentative schedule of reports by month and category. Note: This schedule is intended to be used as a guide only and is subject to change at any time at our discretion.

Are software and SaaS providers running paid campaigns with Software Advice more likely to place higher on reports?

No. A provider's status as a client or non-client is not a factor in any way.

How can providers improve their products’ placement on reports?

While software and SaaS providers cannot directly influence their position in reports, the best way to ensure a product is considered is to collect more reviews.

Can you update a product’s placement in a recent report if that product recently received a number of new reviews?

Currently, our reports are published snapshots in time, as denoted by the specific publish date on each report. We do not make changes to a report after it has been published unless there is a significant error in its production. Any such products and their new reviews will be considered when we next update the report. Please refer to our research calendar for our tentative schedule of reports by month and category.