ETL vs. ELT: How to Choose the Best Approach for Your Data Warehouse

The data explosion has put a massive strain on data warehouse architecture. Organizations handle large volumes and different types of data, including sensor, social media, customer behavior, and big data.

If your organization has a data warehouse, you’re most likely using either the extract, transform, load (ETL) or the extract, load, transform (ELT) data integration method. ETL and ELT are two of the most popular methods of collecting data from multiple sources and storing it in a data warehouse that can be accessed by all users in an organization.

ETL is the traditional method of data warehousing and analytics, but with technology advancements, ELT has now come into the picture. But what happens when “T” and “L” switch places? Let’s discuss.

What is the difference between ETL and ELT?

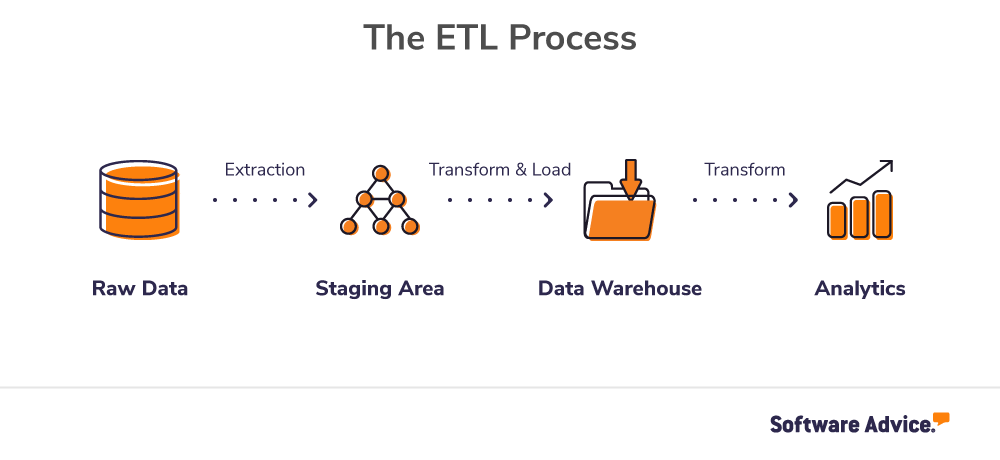

In ETL, data is extracted from disparate sources such as ERP and CRM systems, transformed (calculations are applied, raw data is changed into the required format/type, etc.), and then uploaded to the data warehouse, also called the target database.

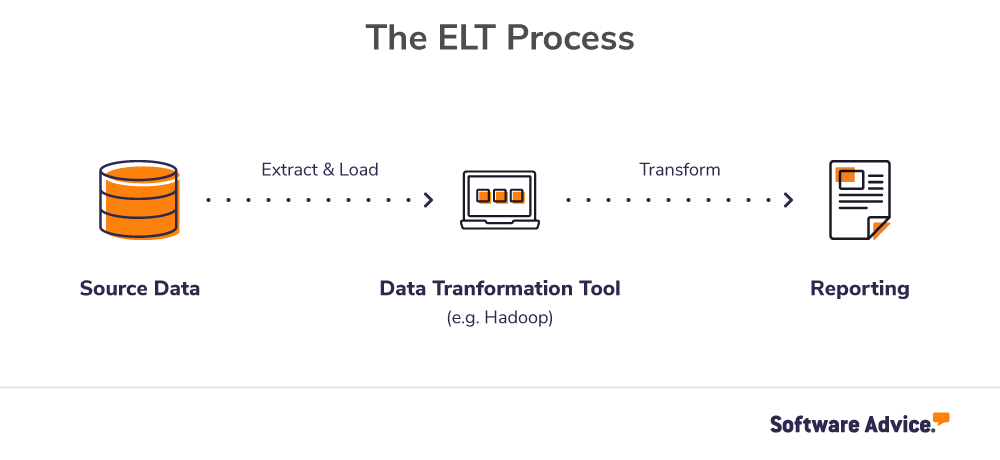

In ELT, after extraction, data is first loaded in the target database and then transformed; data transformation happens within the target database.

That said, the difference between these two processes isn’t just confined to the order in which data is integrated. To understand their differences, you also have to consider:

The underlying storage technologies

The design approach to data warehouse architecture

The business use cases for the data warehouse

The image below explains the different business scenarios suitable for the ETL and ELT data integration methods.

Find more about what ELT vs. ETL implies for data warehouse integration with this pizza analogy:

Everything hinges on the “T” in ETL and ELT

Data transformation is the most complex step in the ETL and ELT processes. In this step, ETL and ELT differ in two major aspects:

When the data transformation is done

Where the data transformation is done

ETL tools help integrate data to meet the requirements of traditional data warehouses that are powered by online analytical processing (OLAP) data cubes and/or relational database management system (RDBMS) technologies. OLAP tools and structured query language (SQL) queries depend on the standardization of dimensions across data sets to deliver aggregate results. This means that data must go through a series of transformations, such as:

Conversion of numerical values

Editing of text strings

Matching of rows and columns

Finding and replacing of data items

Changing of column names

Recombining of columns from different tables and databases

Precalculation of intermediate aggregates

For traditional data warehouses, these transformations are performed before loading data into the target system, typically a relational data warehouse. This is the process followed in ETL. However, with the evolution of underlying data warehousing storage and processing technologies such as Apache Hadoop, it has become possible to accomplish these transformations within the target system after loading the data, which is the process followed in ELT.

Both ETL and ELT involve staging areas. In ETL, the staging area is within the ETL tool, be it proprietary or custom-built. It sits between the source and the target system, and data transformations are performed here. In contrast, with ELT, the staging area is within the data warehouse, and the database engine powering the database management system performs the transformations.

One of the immediate consequences of this aspect is that in ELT you lose the neat visual interface and data preparation/cleaning features that ETL tools provide. Also, transformations in Hadoop are written by Java programmers, so you might need them in your IT team for maintenance purposes. This means that if your IT department is short on Java programmers to perform custom transformations, ELT may not be right for you.

Despite these challenges, should you move to ELT? Are there any advantages in doing so? To answer these questions, we’ll take a closer look at the characteristics of the target systems used in the ELT process.

Hadoop and advanced data integration tools enable ELT

Tools such as Apache Hadoop have renewed the interest of businesses in ELT. Previously, large data sets were divided into smaller ones, processed and transformed remotely, and then sent to the data warehouses. With Hadoop integration, large data sets that used to be circulated around the cloud and processed can now be transformed in the same location, i.e, within Hadoop.

ELT is a good option if you’re moving to a data warehousing structure for supporting big data initiatives using Hadoop or a NoSQL analytical DBMS.

The ETL process feeds traditional warehouses directly, while in ELT, data transformations occur in Hadoop, which then feeds the data warehouses. Hence, poor quality data or data that requires substantial integration shouldn’t be loaded into Hadoop, unless you have a team of highly skilled programmers to write custom codes for complex data transformations.

Data sets loaded into Hadoop during the ELT process can be relatively simple yet massive in volume, such as log files and sensor data. In other cases, you can load highly unstructured data, such as tweets for sentiment analysis, that don’t require extensive upfront transformations.

You can think of Hadoop as “a sandbox for a big data environment” in which your analysts can play around instead of treating it as a straight-up replacement for a data warehouse.

The bottom line

Here are some quick final thoughts about ETL and ELT:

ETL is outdated. It works with traditional data center infrastructures, which cloud technologies are already replacing. The loading time takes hours, even for businesses with data sets that are just a few terabytes in size.

ELT is the future of data warehousing and efficiently utilizes current cloud technologies. It allows businesses to analyze large data sets with lesser maintenance and offers key insights to help make the right business decisions. With time, the scope of ELT will potentially expand as native data integration tools for Hadoop and NoSQL solutions continue to evolve.

Software Advice features a catalog of end-to-end business intelligence (BI) platforms that can help integrate your business data. Check it out now!

If you need help in choosing a specific BI tool, our advisors are here for you. They provide free, fast, and personalized software recommendations, helping businesses of all sizes find software that meets their specific business needs. Schedule an appointment with an advisor here.