Josh P.

AI Adoption in Medical Practices: Drivers, Barriers, and ROI Realities

AI adoption in medical practices is growing steadily, with most providers seeing positive ROI, but progress depends on overcoming integration, skills, and trust barriers to focus AI where it delivers real clinical and operational impact.

As the healthcare industry continues to embrace digital transformation, the benefits of AI in healthcare—from improving workflows to enhancing patient outcomes—are becoming clear. Medical practices have been using AI-enabled tools to meet demanding operational goals and optimize workflows.

AI already plays a significant role in many practices. A close look at exactly how it's being used can help decision-makers and executive leaders understand what they need to do to keep up with the competition.

Software Advice’s 2026 Medical Software Trends Survey* provides this crucial perspective. Our survey collected responses from 400 U.S.-based healthcare providers, spanning a range of practice types and sizes. The respondents also came from a variety of specialties, providing a well-rounded, general snapshot of the role AI is playing in healthcare in 2026.

This survey shaped our report, which is aimed at helping healthcare leaders and IT decision-makers navigate a difficult choice: How to use AI-powered medical software without overspending on features that may not bring adequate ROI.

Key findings

There are high expectations regarding AI adoption. Almost half (49%) of healthcare providers say their expectations for AI's potential have risen in the past year.

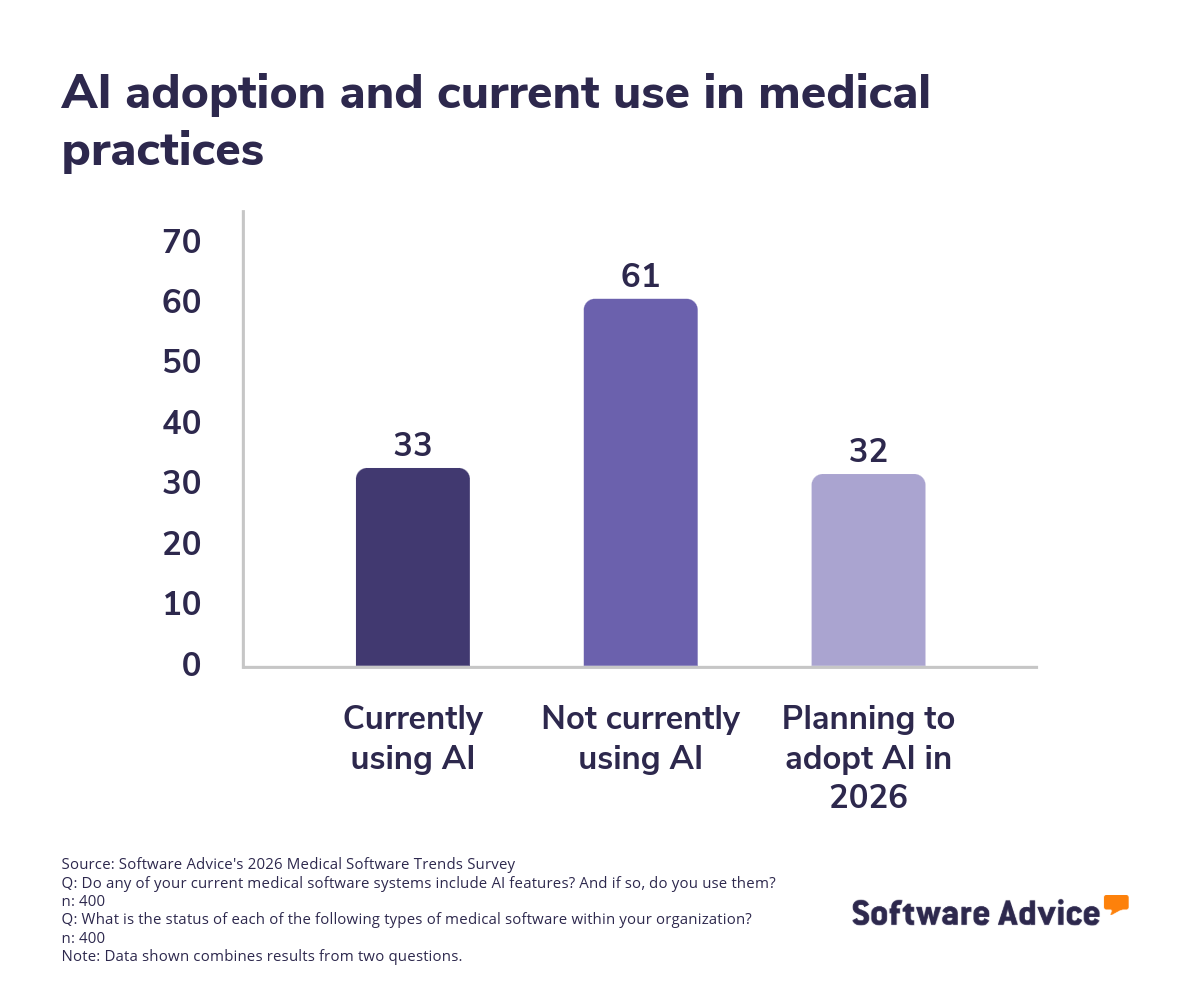

Current AI usage is moderate. Only one-third (33%) of practices already use medical software with AI features.

Natural language processing (NLP) and large language models (LLMs) are leading the way. A total of 42% of those who use AI turn to NLP for processing documentation, and 36% use LLMs.

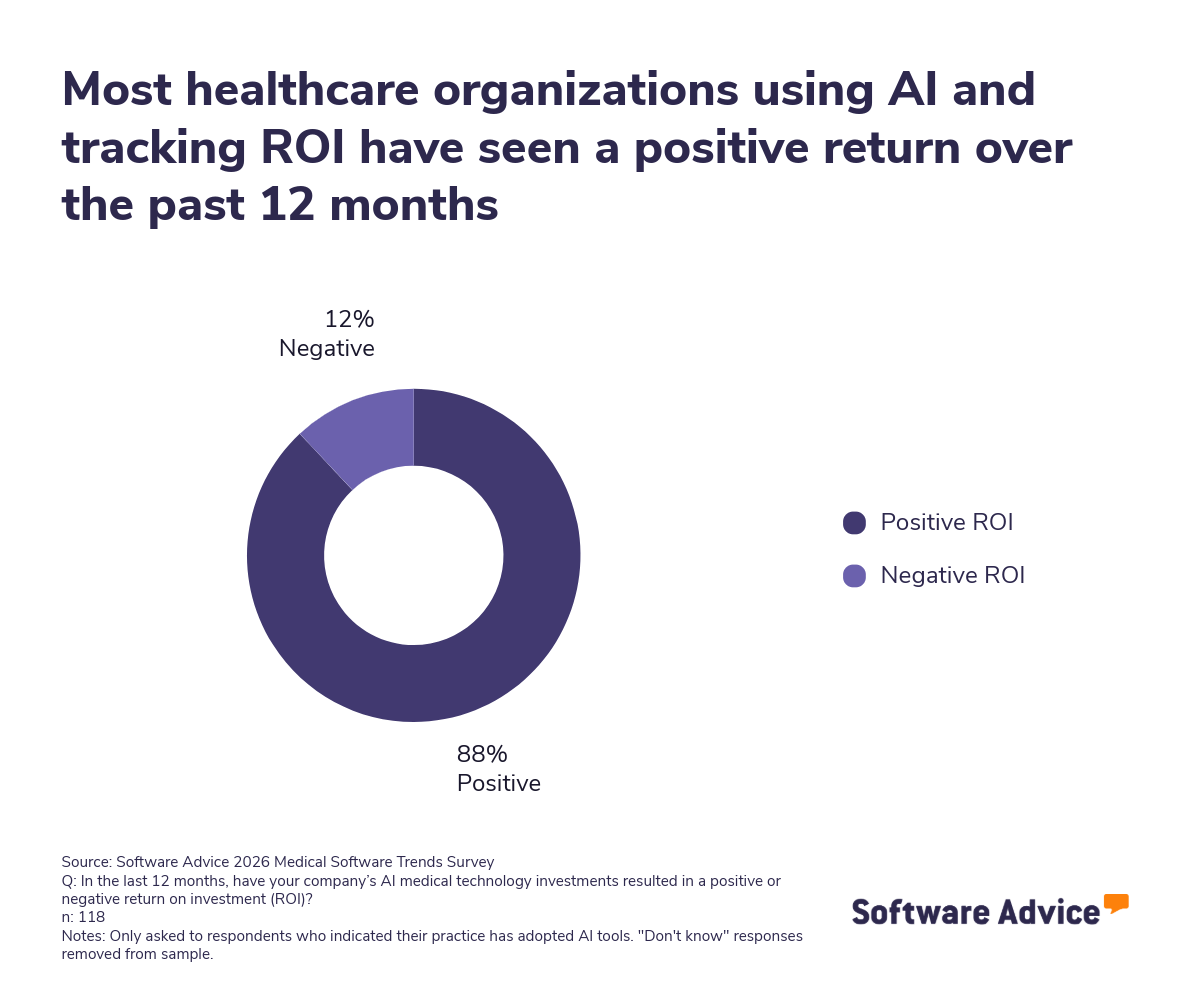

ROI is generally positive. AI adopters report positive returns 88% of the time.

Respondents feel AI’s potential lies in supporting clinical decisions. More than half (52%) of respondents say AI will have the most significant impact on improving clinical decision-making.

The state of AI adoption in medical practices

AI adoption in medical practices is growing gradually. At the same time, the rate at which medical practices adopt AI varies. Understanding adoption trends is essential for realizing the benefits of AI in healthcare, such as improved diagnostics and streamlined administration.

According to our survey, one-third of practices are already using AI features. This doesn’t mean the rest are letting AI pass them by; another 32% plan to adopt AI and analytics tools over the next year. The combination of practices using AI and those planning to use it signals its growing momentum.

How practices are using AI

The AI tools practices are turning to cover a wide range of applications:

Clinical decision support systems (CDSS). A total of 31% of practices are using AI for CDSS. These systems provide clinicians with evidence-based guidance to improve diagnostic accuracy. They also recommend treatment options and flag potential patient risks.

NLP. A sizable proportion (42%) of AI-enabled practices use NLP to automate the documentation process and transcribe physician notes. It can also extract structured data from unstructured text. When used in combination, these features can significantly reduce administrative workload.

LLMs. Among practices that use AI, 36% rely on LLMs. These tools help draft clinical notes and communicate with patients. They can also parse information and retrieve essential data to make decisions.

Virtual assistants and chatbots. About 30% of respondents report using these tools. They streamline patient engagement by handling routine queries. They can also improve communication by interacting with patients after hours, when clinicians are unavailable.

Predictive analytics. Despite the power of predictive analytics, only about 21% of practices are using it to improve patient outcomes. Those that do use it for tasks such as stratifying risk, calculating the likelihood of a patient being readmitted, and managing care more proactively.

AI diagnostic imaging tools. One in five practices (20%) use AI imaging tools in radiology, pathology, or another discipline. These can detect abnormalities quickly and help clinicians better interpret scans.

Differences in adoption rates according to size

The ways in which practices are using AI point to a clear trend: Operational needs dictate which tools are prioritized.

Larger practices and multi-specialty groups were more likely to adopt AI than their smaller counterparts. Economies of scale play a role because having more patients justifies the investment in more expensive tools to serve them.

Smaller practices may begin with more accessible AI solutions, such as NLP. The features they use may be relatively commonplace outside the healthcare world, such as chatbots and generative AI-powered reports.

Practices with targeted specialties are also turning to AI more often. Radiology and oncology practices, for example, are taking the lead in AI-powered diagnostic imaging. On the other hand, primary care providers are more likely to use CDSS and virtual assistants.

Drivers of AI adoption

Medical practices are turning to AI-powered software to meet their most important operational needs. According to survey data, one of the most impactful drivers seems to be increased trust and hope: 49% of healthcare providers report their expectations have grown in the past year.

But the desire to trust AI to play a greater role isn’t unfounded. There are clear triggers for adoption, such as:

A need for more efficient workflows. Practices are using AI tools to make workflows faster and smoother. The tools automate tasks that employees used to perform manually and help clinicians make better decisions faster.

Augmenting existing software with AI tools. Many healthcare organizations are turning to AI upgrades. Instead of buying new software, they pay to upgrade their existing software by adding AI features. For example, they add AI functionality into an electronic health record (EHR) solution to enhance practice management.

Enhancing integration within software stacks. Practices are using AI to make their software more interoperable. AI can quickly grab and interpret data from multiple sources, synthesizing it to help clinicians improve treatment.

Leading AI use cases

The most common use cases for AI include:

Supporting clinical decisions. Out of those who use AI, 52% say that improving clinical decision-making will be the most significant way of using AI over the next 12 months. AI can give clinicians insights into patient needs and help ensure that a clinician’s decisions conform to compliance guidelines.

Diagnostics and imaging. AI-driven imaging tools are helpful in radiology and pathology departments because they can detect anomalies faster and with greater accuracy than humans. At the same time, there’s room for growth because only 20% are currently using AI in image-based diagnostics.

Documentation and administration. LLMs and NLP models can automate transcriptions and turn clinical notes into effective documentation. Although generative AI tools built on these models are common in everyday life, only around 42% of AI adopters use NLP in medical practices, and only 36% use LLMs to generate notes and communicate with patients.

Patient engagement. Chatbots and virtual assistants enable patients to schedule appointments, receive reminders, and access important information. A total of 30% of adopters use these tools to better interact with patients.

Predictive analytics. Around 21% of respondents use predictive analytics to anticipate patient outcomes and forecast their needs. This highlights the room for growth in this application of AI, which can have a dramatic effect on patient outcomes.

Barriers to AI adoption

On the other side of the coin, a surprising 61% of practices aren’t using AI at all. This is due to a variety of barriers, such as:

Fear of an over-reliance on AI. More than a third (37%) said they were afraid their staff might start depending too heavily on AI instead of using their own professional judgment.

Privacy, security, and compliance concerns. 28% of respondents said they were worried about privacy and security issues, as well as regulatory compliance concerns. Running afoul of HIPAA regulations can have dire consequences, resulting in hefty fines. Some practices are hesitant to put data-laden functions in the hands of AI because a mistake there could be very detrimental.

Skills gaps. A quarter (25%) cite insufficient AI skills as a primary barrier. For many employees, learning how to use AI is challenging, especially if they are already used to traditional workflows.

Integration challenges. 24% of respondents report struggling with integrating AI-powered software into their existing stack. This can be difficult, particularly if a non-AI-powered solution already integrates well enough.

Limited evidence of clinical effectiveness. 21% said they hadn’t seen adequate proof of the clinical efficacy of AI solutions to start using them.

Employee resistance. Cultural resistance can easily undermine AI adoption, and 19% of respondents cited employee skepticism as a significant barrier. One factor making employees skeptical is the lack of transparency in AI algorithms. It’s hard to trust something if you don’t know how it works.

Measuring ROI: Realities and gaps

The same 2026 Software Advice survey reveals that while many practices have adopted AI, not all are measuring its financial or operational impact.

Among the 232 respondents whose practices have adopted AI and who are tracking ROI:

A total of 88% report positive ROI over the past 12 months.

Only 12% report a negative ROI. This is typically due to underutilization or integration issues. Insufficient training on new AI-powered tools is another factor that can hold back returns.

The factors driving positive ROI are diverse:

Efficiency gains. Automating routine tasks like documentation and appointment scheduling frees up staff to focus on other work. NLP, LLMs, and chatbots are particularly effective in lifting the burden off administrators’ shoulders.

Improved accuracy. CDSS tools help reduce errors in diagnosis and treatment recommendations. They also reduce medication-ordering errors.

Stronger compliance. Accurate, evidence-based guidance makes it easier to stick to regulatory requirements.

Better patient outcomes. Predictive analytics and AI-assisted diagnostics enable earlier detection of risk factors. They can also pinpoint how and when diseases are progressing. Using this data, clinicians can be more proactive when making healthcare decisions.

At the same time, many practices struggle with measuring ROI. This is often due to:

Uncertainty about metrics. It can be difficult to quantify how AI tools are actually impacting patient outcomes or administrative efficiency.

Lack of adequate data infrastructure. Without the ability to track clinical and operational metrics, it’s hard to measure efficiency gains.

Limited experience with evaluating technology. Some practices may lack staff with expertise in evaluating the ROI of technology.
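
For practices unsure where to start with metrics, one common starting point is the standard ROI formula: net benefit divided by cost, expressed as a percentage. The sketch below is only an illustration. The function name, the benefit and cost categories, and every dollar figure are hypothetical and are not drawn from the survey.

```python
def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a percentage: (benefit - cost) / cost * 100."""
    if total_cost <= 0:
        raise ValueError("total_cost must be greater than zero")
    return (total_benefit - total_cost) / total_cost * 100


# Hypothetical 12-month figures for one AI-powered tool (illustrative only).
benefits = {
    "staff_hours_saved": 18000.0,      # value of time freed up by automation
    "reduced_claim_denials": 6000.0,   # revenue recovered through fewer errors
}
costs = {
    "software_subscription": 12000.0,
    "staff_training": 4000.0,
}

roi = roi_percent(sum(benefits.values()), sum(costs.values()))
print(f"12-month ROI: {roi:.1f}%")
```

Listing benefits and costs by category, rather than as single totals, makes it easier to see which assumptions drive the result and to revisit them as better data becomes available.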

Recommendations for healthcare leaders

Leveraging AI takes a lot more than just buying the software. Medical practices have to plan how they’re going to implement each solution and ensure staff buy-in for each AI implementation. Additionally, healthcare leaders need to establish governance for the use of AI to reduce the risk of overuse or misuse, particularly where it could harm patient outcomes.

To help optimize AI usage, here are some tips to follow:

Use training and education to target skills gaps

Since some respondents report insufficient AI skills as a barrier, practices should get their staff the training they need to feel confident using AI tools. Some examples of effective training include:

Tutorials about how to use AI features

Workshops that teach participants how to interpret AI outputs to improve treatments

Regular professional development that focuses on AI literacy

Improve the availability and quality of data

AI can’t deliver adequate results without high-quality data. With 32% of respondents citing poor data quality as a challenge, this needs to be a focal point. To ensure data meets the needs of AI-powered systems, leaders should:

Enact standardized data entry protocols. This could also be accomplished using AI-powered data transformation, if available.

Use highly structured formats, such as coding systems that categorize diagnoses or templates that describe symptoms.

Continually check for inconsistencies in how data is gathered and entered.
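
A recurring consistency check does not need to be elaborate. The sketch below, written in Python, flags records where a diagnosis was typed as free text instead of a code, where the code is missing, or where the date is not in a single agreed format. The records, field names, and the loose code-shape pattern are all assumptions made for illustration. A real practice would validate against its own coding system and EHR export format.

```python
import re
from collections import Counter

# Hypothetical intake records; field names and values are illustrative only.
RECORDS = [
    {"patient_id": "P001", "diagnosis_code": "E11.9", "visit_date": "2025-09-03"},
    {"patient_id": "P002", "diagnosis_code": "diabetes", "visit_date": "2025-09-04"},
    {"patient_id": "P003", "diagnosis_code": "I10", "visit_date": "09/05/2025"},
    {"patient_id": "P004", "diagnosis_code": "", "visit_date": "2025-09-06"},
]

# Assumed, deliberately loose shape for a diagnosis code: a letter, a digit,
# one more character, and an optional dotted suffix. This checks form only,
# not whether the code actually exists in any coding system.
CODE_SHAPE = re.compile(r"^[A-Z]\d[0-9A-Z](\.[0-9A-Z]{1,4})?$")
# Assumed house standard for dates: YYYY-MM-DD.
DATE_SHAPE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def find_issues(record: dict) -> list[str]:
    """Return a list of data-entry problems found in one record."""
    issues = []
    code = record.get("diagnosis_code", "")
    if not code:
        issues.append("missing diagnosis code")
    elif not CODE_SHAPE.match(code):
        issues.append("diagnosis entered as free text")
    if not DATE_SHAPE.match(record.get("visit_date", "")):
        issues.append("non-standard date format")
    return issues


def summarize(records: list[dict]) -> dict[str, int]:
    """Count how often each kind of problem appears across all records."""
    counts = Counter()
    for record in records:
        counts.update(find_issues(record))
    return dict(counts)


summary = summarize(RECORDS)
for issue, count in summary.items():
    print(f"{issue}: {count}")
```

Running a summary like this on a schedule shows whether inconsistencies are shrinking over time and which entry habits need attention.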

Improve privacy, security, and compliance systems

The percentage of respondents citing privacy and compliance issues (28%) is even higher than the number worried about skills gaps. This presents an important opportunity for leaders. By establishing compliance and privacy mechanisms, you can help staff feel more comfortable assigning data-dependent tasks to AI-powered systems.

Otherwise, you run the risk of making staff feel they may be endangering the privacy of patients or even the financial standing of the practice due to the risk of fines.

Improve ROI measurement

Better ROI tracking results from measuring both the direct and indirect benefits of AI adoption.

Here’s an example of a direct benefit:

Doctors can detect a greater percentage of malignant tumors using AI-powered imagery analysis

Here are some examples of indirect benefits:

Patient satisfaction scores go up because the administrative staff can process intake forms quickly

Employee turnover drops because AI tools make daily tasks easier, reducing stress

Both direct and indirect benefits can be assessed using key performance indicators (KPIs). In many cases, this starts with establishing a baseline and a timeframe, often with a KPI that you’ve yet to measure.

For instance, perhaps the level of frustration felt by billing staff while working with accounts receivable hasn’t been measured yet. Prior to implementing AI-powered form completion, you could conduct a survey to gather input on frustration levels. Then a similar survey could be conducted six months after implementing your AI solution.
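
The before-and-after comparison described above comes down to simple arithmetic. Here is a minimal sketch in Python, assuming the survey asks billing staff to rate frustration on a 1-to-5 scale. The scale, the ratings, and the function name are hypothetical and chosen only to show the calculation.

```python
from statistics import mean


def kpi_change(baseline: list[float], follow_up: list[float]) -> dict[str, float]:
    """Compare average KPI scores from a baseline and a follow-up survey."""
    base_avg = mean(baseline)
    follow_avg = mean(follow_up)
    if base_avg == 0:
        raise ValueError("baseline average must be non-zero")
    return {
        "baseline": base_avg,
        "follow_up": follow_avg,
        "change": follow_avg - base_avg,
        "percent_change": (follow_avg - base_avg) / base_avg * 100,
    }


# Hypothetical frustration ratings (1 = low, 5 = high) from five billing staff.
before_ai = [4, 5, 4, 3, 4]       # collected before implementation
six_months_in = [3, 2, 3, 2, 2]   # collected six months after implementation

result = kpi_change(before_ai, six_months_in)
print(f"Average frustration: {result['baseline']:.1f} -> {result['follow_up']:.1f}")
print(f"Change: {result['percent_change']:.0f}%")
```

With a sample this small, the result is a directional signal rather than proof. Asking the same questions of the same group at both points in time keeps the comparison fair.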

Future outlook

The future outlook for the use of AI in medical practices is bright—but not because of its current widespread adoption. Rather, the fact that there’s so much room to grow, combined with stats around how practices plan to use AI more, points to deeper and broader use over the next few years.

For example, 88% of AI adopters who track ROI report positive returns over the past 12 months, so the monetary benefits are compelling. At the same time, even though only 42% report using analytical tools, 32% say they plan to adopt them in the near future. Therefore, both the motivation to use AI tools more and the monetary benefits are in place.

Still, it’s important to gauge and account for pitfalls associated with AI. For instance, AI may also hurt traditional healthcare. A total of 24% of respondents say that patients using generative AI (GenAI) to self-diagnose, the spread of misinformation, or both will be a top challenge for the next 12 months.

The key for medical practices will be to continually embrace AI and make it part of their everyday workflows. As patients become increasingly accustomed to AI-powered experiences in their everyday lives, they’ll come to expect them when they go in for treatment or diagnosis. By meeting those expectations, medical practices can earn patients’ trust and patronage while increasing efficiency along the way.

Survey methodology

Software Advice’s 2026 Medical Software Trends Survey was conducted online in September 2025 among 400 physicians in the U.S. employed full-time in medical practices. The goal of this study was to understand the timelines, organizational challenges, research behaviors, and adoption processes of medical software buyers. Respondents were screened to ensure they were involved in medical software purchasing decisions. The study included 134 small practices (1-5 licensed providers), 144 medium practices (6-20 licensed providers), and 122 large practices (more than 20 licensed providers).